The Historical Growth of Data: Why We Need a Faster Transfer Solution for Large Data Sets

Tracing the History of Data

People have been creating data since we started writing thousands of years ago. Humans developed writing independently in Ancient Sumer around 3200 BCE and in Mesoamerica around 600 BCE. Even this early writing recorded trade transactions, presumably because that information was important to keep on hand.

Historically, technological inventions and cultural milestones have caused shifts in how much data we create and store. A few notable examples:

- The 15th century invention of the printing press.

- The decrease in the cost of publishing in the late 19th century.

- The “information explosion” of the 1940s, when university research libraries started doubling in size within a short time span.

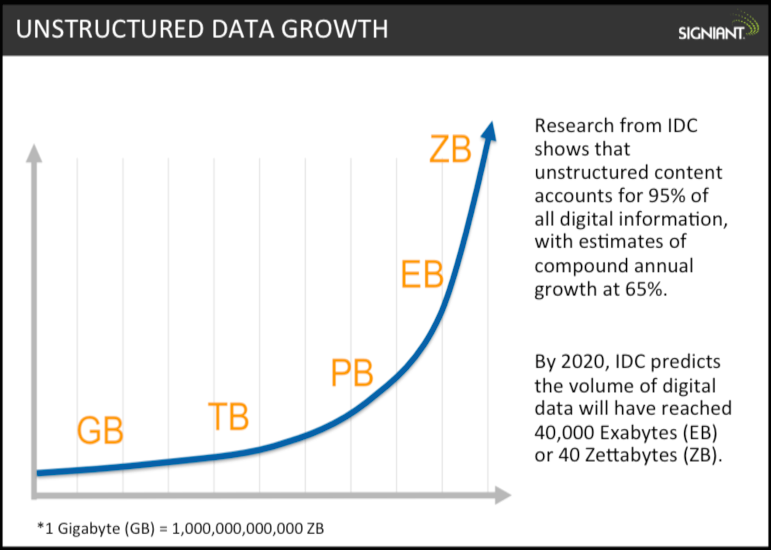

Even the information explosion was a mere drop in the ocean compared to the amount of data created today. According to the ACI Information Group, we created 5 exabytes of content every day in 2013, the same amount of data that was created from the beginning of the world through 2003. The International Data Corporation (IDC) estimates that by 2020 the world’s digital data will total nearly 40 zettabytes.

Most of this new content (up to 95%) is unstructured data such as video, images, and the myriad other types of data that aren’t organized in relational databases. With unstructured data growing exponentially at businesses, universities, non-profits, and government centers, data growth has outpaced the solutions available for transferring and storing it.

Standard methods for transferring large files, such as FTP, work less well as files grow larger, especially beyond about 500 MB. As a result, storing, sending, and sharing large data sets has become a problem that technology experts are struggling to solve. What challenges do companies face when trying to handle their large, unstructured data sets?

Bandwidth, Latency, and the Problem of Transfer Speed

Even technically capable people have a hard time sending really large data files over IP networks. Why?

Limited bandwidth capacity. Bandwidth has traditionally been the most commonly cited issue, and many Internet vendors claim that companies can speed up long-distance file transfers by purchasing more of it.

Latency. However, for protocols built on TCP (including FTP and HTTP), the bigger problem is latency. A TCP sender can have only a limited amount of unacknowledged data in flight before it must wait for acknowledgments from the receiver, so the longer each round trip takes, the less of the available bandwidth a transfer can use. Thus, increasing bandwidth does little to speed up long-distance transfers over TCP-based protocols.
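To make the latency argument concrete, here is a minimal Python sketch of the window-limited throughput ceiling for a single TCP connection: because at most one window of unacknowledged data can be in flight per round trip, throughput cannot exceed window / RTT, regardless of link speed. The 64 KiB window, the round-trip times, and the link speeds are hypothetical values chosen for illustration, and the model deliberately ignores packet loss, congestion control, and TCP window scaling. It illustrates the general TCP behavior described above, not how Signiant’s software works.

```python
# Sketch: window-limited TCP throughput ceiling (illustrative assumptions only).
# A TCP sender can have at most one window of unacknowledged data in flight,
# so per-connection throughput cannot exceed window / RTT, no matter how fast
# the underlying link is. Loss and congestion control are ignored here.


def tcp_throughput_mbps(window_bytes: int, rtt_ms: float, link_mbps: float) -> float:
    """Return achievable throughput in Mbit/s for one TCP flow.

    The flow is capped by whichever is smaller: the link rate or window / RTT.
    """
    rtt_s = rtt_ms / 1000.0
    window_limit_mbps = (window_bytes * 8) / rtt_s / 1_000_000
    return min(link_mbps, window_limit_mbps)


def bandwidth_delay_product_bytes(link_mbps: float, rtt_ms: float) -> float:
    """Bytes that must be in flight to keep the link fully utilized."""
    return link_mbps * 1_000_000 / 8 * (rtt_ms / 1000.0)


if __name__ == "__main__":
    window = 64 * 1024  # hypothetical 64 KiB window (no window scaling)
    for rtt in (1, 20, 100, 200):  # hypothetical RTTs in ms, LAN to intercontinental
        for link in (100, 1000):  # hypothetical link speeds in Mbit/s
            rate = tcp_throughput_mbps(window, rtt, link)
            print(f"RTT {rtt:>3} ms, link {link:>4} Mbit/s -> {rate:7.1f} Mbit/s")
```

In this sketch, at a 100 ms round-trip time the flow tops out at about 5.2 Mbit/s on both the 100 Mbit/s and the 1 Gbit/s link, which shows why buying ten times the bandwidth does nothing for this transfer. `bandwidth_delay_product_bytes(1000, 100)` returns about 12.5 MB, the amount of data that would have to be in flight to fill a 1 Gbit/s link at that latency, far more than the assumed 64 KiB window.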

Technology solutions that deliver real speed improvements need to overcome latency in order to use all of the available bandwidth. Signiant understands the challenges and roadblocks associated with handling large data growth. We helped media companies move massive media files online in the early 2000s, and saw the need for better file transfer solutions in other data-intensive industries. Our software moves data up to 200x faster than TCP-based solutions such as FTP.

To address modern businesses’ growing need for better data handling, we have developed two SaaS solutions for large-file transfer: Media Shuttle for person-to-person transfers and Flight for cloud storage upload and download. We look to the future with cost-effective technology that can handle data growth and scale with your business.